Building Your First Deep Learning Model: A Step-by-Step Guide

Introduction to Deep Learning

Deep learning is a subset of machine learning, which itself is a subset of artificial intelligence (AI). Deep learning models are inspired by the structure and function of the human brain and are composed of layers of artificial neurons. These models are capable of learning complex patterns in data through a process called training, where the model is iteratively adjusted to minimize errors in its predictions.

In this blog post, we will walk through the process of building a simple artificial neural network (ANN) to classify handwritten digits using the MNIST dataset.

Understanding the MNIST Dataset

The MNIST dataset (Modified National Institute of Standards and Technology dataset) is one of the most famous datasets in the field of machine learning and computer vision. It consists of 70,000 grayscale images of handwritten digits from 0 to 9, each of size 28×28 pixels. The dataset is divided into a training set of 60,000 images and a test set of 10,000 images. Each image is labeled with the corresponding digit it represents.

Downloading the Dataset

We will use the MNIST dataset provided by the Keras library, which makes it easy to download and use in our model.

Step 1: Importing the Required Libraries

Before we start building our model, we need to import the necessary libraries. These include libraries for data manipulation, visualization, and building our deep learning model.

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import tensorflow as tf

from tensorflow import keras

- numpy and pandas are used for numerical and data manipulation.

- matplotlib and seaborn are used for data visualization.

- tensorflow and keras are used for building and training the deep learning model.

Step 2: Loading the Dataset

The MNIST dataset is available directly in the Keras library, making it easy to load and use.

(X_train, y_train), (X_test, y_test) = keras.datasets.mnist.load_data()

This line of code downloads the MNIST dataset the first time it runs and returns it already split into training and test sets:

- X_train and y_train are the training images and their corresponding labels.

- X_test and y_test are the test images and their corresponding labels.

Step 3: Inspecting the Dataset

Let’s take a look at the shape of our training and test datasets to understand their structure.

print(X_train.shape)

print(X_test.shape)

print(y_train.shape)

print(y_test.shape)

- X_train.shape outputs (60000, 28, 28), indicating there are 60,000 training images, each of size 28×28 pixels.

- X_test.shape outputs (10000, 28, 28), indicating there are 10,000 test images, each of size 28×28 pixels.

- y_train.shape outputs (60000,), indicating there are 60,000 training labels.

- y_test.shape outputs (10000,), indicating there are 10,000 test labels.

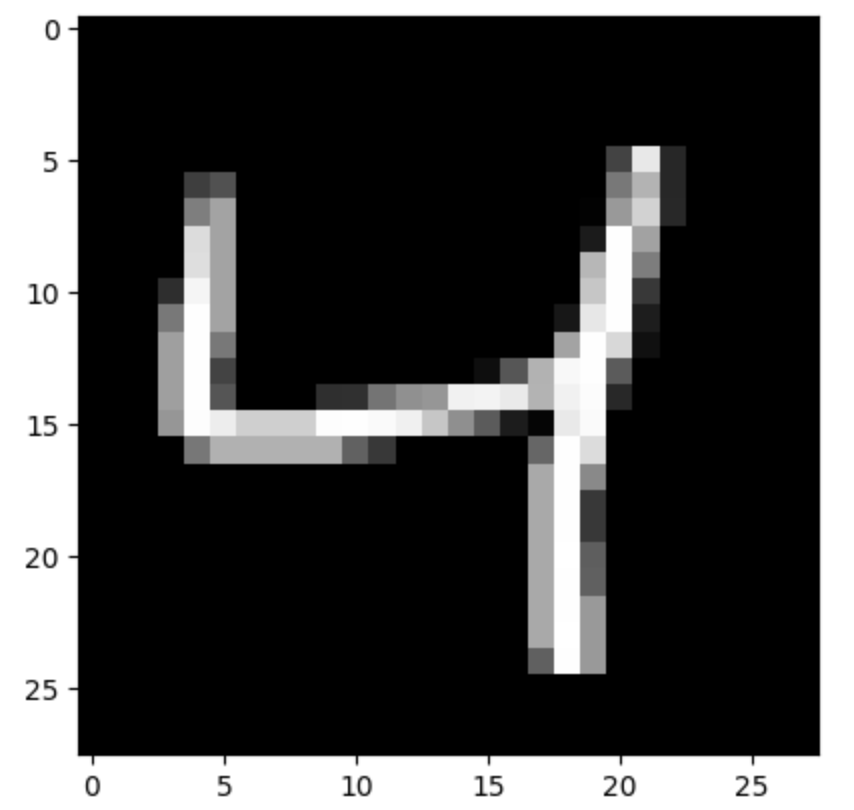

To get a better understanding, let’s visualize one of the training images and its corresponding label.

plt.imshow(X_train[2], cmap='gray')

plt.show()

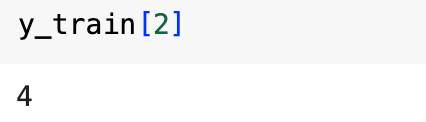

print(y_train[2])

- plt.imshow(X_train[2], cmap='gray') displays the third image in the training set in grayscale.

- plt.show() renders the image.

- print(y_train[2]) outputs the label for the third image, which is the digit the image represents.

Step 4: Rescaling the Dataset

Pixel values in the images range from 0 to 255. To improve the performance of our neural network, we rescale these values to the range [0, 1].

X_train = X_train / 255

X_test = X_test / 255

This normalization helps the neural network learn more efficiently by ensuring that the input values are in a similar range.
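As a minimal sketch of what the rescaling does, the snippet below applies the same division to a small made-up array. The `pixels` values are illustrative and not taken from MNIST. It also shows that the division converts the 8-bit integer pixels to floating point.

```python
import numpy as np

# Hypothetical 2x3 "image" with 8-bit pixel values, standing in for one MNIST image.
pixels = np.array([[0, 51, 102], [153, 204, 255]], dtype=np.uint8)

# Same operation as in the tutorial: true division turns the integers into floats.
scaled = pixels / 255

print(scaled.dtype)                 # float64
print(scaled.min(), scaled.max())   # 0.0 1.0
```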

Step 5: Reshaping the Dataset

Our neural network expects the input to be a flat vector rather than a 2D image. Therefore, we reshape our training and test datasets accordingly.

X_train = X_train.reshape(len(X_train), 28 * 28)

X_test = X_test.reshape(len(X_test), 28 * 28)

- X_train.reshape(len(X_train), 28 * 28) reshapes the training set from (60000, 28, 28) to (60000, 784), flattening each 28×28 image into a 784-dimensional vector.

- Similarly, X_test.reshape(len(X_test), 28 * 28) reshapes the test set from (10000, 28, 28) to (10000, 784).
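To make the flattening concrete, here is a small sketch on a made-up batch of three blank images (the array `batch` is an assumption for illustration). It marks one pixel and shows where that pixel ends up in the 784-element vector.

```python
import numpy as np

# Hypothetical batch of 3 blank "images" (real MNIST has 60,000 training images).
batch = np.zeros((3, 28, 28))
batch[0, 2, 5] = 1.0  # mark the pixel at row 2, column 5 of the first image

flat = batch.reshape(len(batch), 28 * 28)

print(flat.shape)            # (3, 784)
# Rows are laid out one after another, so (row, col) lands at index row * 28 + col.
print(flat[0, 2 * 28 + 5])   # 1.0
```

Reshaping only changes how the same values are indexed; no pixel values are altered.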

Step 6: Building Our First ANN Model

We will build a simple neural network with one input layer and one output layer. The input layer will have 784 neurons (one for each pixel), and the output layer will have 10 neurons (one for each digit).

ANN1 = keras.Sequential([

keras.layers.Dense(10, input_shape=(784,), activation='sigmoid')

])

- keras.Sequential() creates a sequential model, which is a linear stack of layers.

- keras.layers.Dense(10, input_shape=(784,), activation='sigmoid') adds a dense (fully connected) layer with 10 neurons, input shape of 784, and sigmoid activation function.
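For intuition, the computation performed by such a dense layer can be sketched in plain NumPy. The weights below are random placeholders, not the values Keras would learn, and the names `W`, `b`, and `sigmoid` are chosen here for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical inputs and randomly initialised parameters (not trained values).
x = rng.random((2, 784))             # 2 flattened images
W = rng.normal(0, 0.05, (784, 10))   # one weight per (pixel, output neuron) pair
b = np.zeros(10)                     # one bias per output neuron

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# A dense layer computes activation(x @ W + b).
out = sigmoid(x @ W + b)

print(out.shape)         # (2, 10)
print(W.size + b.size)   # 7850 trainable parameters
```

The parameter count (784 × 10 weights plus 10 biases) should match what `ANN1.summary()` reports for this layer.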

Next, we compile our model by specifying the optimizer, loss function, and metrics.

ANN1.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

- optimizer='adam' specifies the Adam optimizer, which is an adaptive learning rate optimization algorithm.

- loss='sparse_categorical_crossentropy' specifies the loss function, which is suitable for multi-class classification problems where the labels are integers (as in y_train) rather than one-hot vectors.

- metrics=['accuracy'] specifies that we want to track accuracy during training.
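The loss for a single example can be sketched in a few lines of plain Python: it is the negative log of the probability the model assigned to the true class. The function below is a simplified stand-in written for this post, not the Keras implementation, and the three-class `probs` vector is made up.

```python
import math

def sparse_categorical_crossentropy(true_label, probs):
    """Loss for one example: negative log of the probability given to the true class."""
    return -math.log(probs[true_label])

# Hypothetical 3-class prediction (MNIST would have 10 entries).
probs = [0.1, 0.7, 0.2]

right = sparse_categorical_crossentropy(1, probs)  # true class received 0.7
wrong = sparse_categorical_crossentropy(0, probs)  # true class received 0.1

print(round(right, 4))  # 0.3567
print(round(wrong, 4))  # 2.3026
```

The loss is small when the true class gets a high probability and grows quickly as that probability approaches zero. During training, the per-example losses are averaged over each batch.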

We then train the model on the training data.

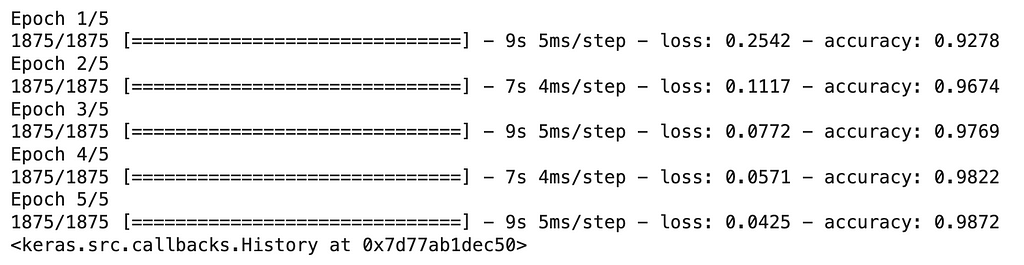

ANN1.fit(X_train, y_train, epochs=5)

- ANN1.fit(X_train, y_train, epochs=5) trains the model for 5 epochs. An epoch is one complete pass through the training data.
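Within each epoch, Keras processes the data in mini-batches and updates the weights after every batch. Since the call above does not pass `batch_size`, the Keras default of 32 applies. A quick calculation of how many weight updates that implies:

```python
import math

num_train_images = 60_000
batch_size = 32   # Keras default for fit() when batch_size is not passed
epochs = 5

steps_per_epoch = math.ceil(num_train_images / batch_size)
total_updates = steps_per_epoch * epochs

print(steps_per_epoch)  # 1875
print(total_updates)    # 9375
```

This is why the training progress bar counts up to 1875 in each epoch rather than to 60000.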

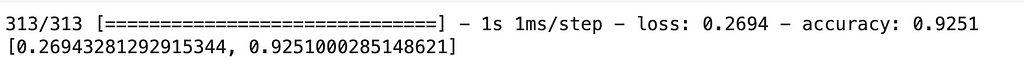

Step 7: Evaluating the Model

After training the model, we evaluate its performance on the test data.

ANN1.evaluate(X_test, y_test)

- ANN1.evaluate(X_test, y_test) evaluates the model on the test data and returns the loss value and metrics specified during compilation.
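Because the model was compiled with metrics=['accuracy'], the metric reported here is the fraction of test images classified correctly. A minimal sketch of that calculation, using made-up labels rather than real model output:

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)

# Hypothetical labels, for illustration only.
true_labels = [7, 2, 1, 0, 4]
predicted_labels = [7, 2, 1, 0, 9]

print(accuracy(true_labels, predicted_labels))  # 0.8
```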

Step 8: Making Predictions

We can use our trained model to make predictions on the test data.

y_predicted = ANN1.predict(X_test)

- ANN1.predict(X_test) generates predictions for the test images.

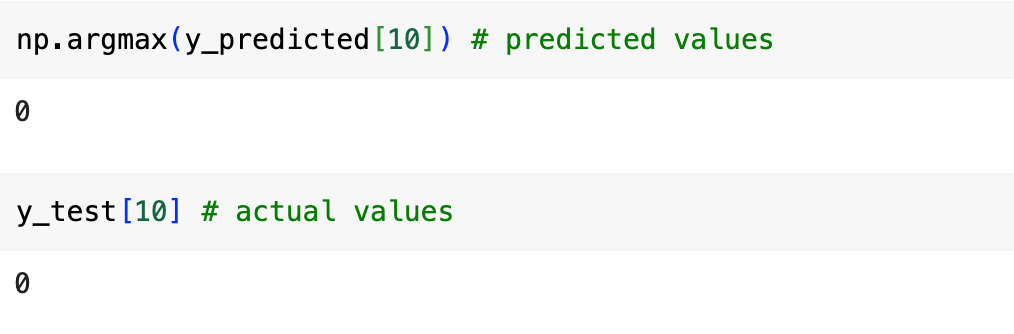

To see the predicted label for the eleventh test image (index 10):

print(np.argmax(y_predicted[10]))

print(y_test[10])

- np.argmax(y_predicted[10]) returns the index of the highest value in the prediction vector, which corresponds to the predicted digit.

- print(y_test[10]) prints the actual label of the same test image (index 10) for comparison.
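To illustrate how argmax turns a prediction vector into a digit, here is a small sketch with a made-up 10-element vector (these numbers are not real model output):

```python
import numpy as np

# Hypothetical output of the 10-neuron layer for one image.
prediction = np.array([0.01, 0.02, 0.05, 0.10, 0.03, 0.02, 0.90, 0.04, 0.01, 0.02])

# The position of the largest score is the predicted digit.
predicted_digit = np.argmax(prediction)
print(predicted_digit)  # 6
```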

Step 9: Building a More Complex ANN Model

To improve our model, we add a hidden layer with 150 neurons and use the ReLU activation function, which often performs better in deep learning models.

ANN2 = keras.Sequential([

keras.layers.Dense(150, input_shape=(784,), activation='relu'),

keras.layers.Dense(10, activation='sigmoid')

])

- keras.layers.Dense(150, input_shape=(784,), activation='relu') adds a dense hidden layer with 150 neurons and ReLU activation function.
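As a rough sketch of what changes with this model, the snippet below defines ReLU in plain Python and counts the trainable parameters of the two dense layers. The helper names `relu` and `dense_params` are introduced here for illustration only.

```python
def relu(z):
    """ReLU passes positive values through and clips negatives to zero."""
    return max(0.0, z)

print([relu(z) for z in (-2.0, -0.5, 0.0, 1.5)])  # [0.0, 0.0, 0.0, 1.5]

def dense_params(inputs, units):
    """Weights (inputs * units) plus one bias per unit."""
    return inputs * units + units

hidden = dense_params(784, 150)   # 117,750
output = dense_params(150, 10)    # 1,510
print(hidden + output)            # 119260
```

The second model therefore has roughly fifteen times as many parameters as the single-layer model (7,850), which gives it more capacity to fit the data.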

We compile and train the improved model in the same way.

ANN2.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

ANN2.fit(X_train, y_train, epochs=5)

Step 10: Evaluating the Improved Model

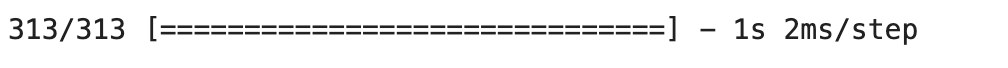

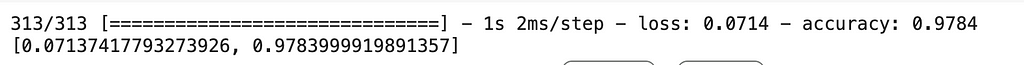

We evaluate the performance of our improved model on the test data.

ANN2.evaluate(X_test, y_test)

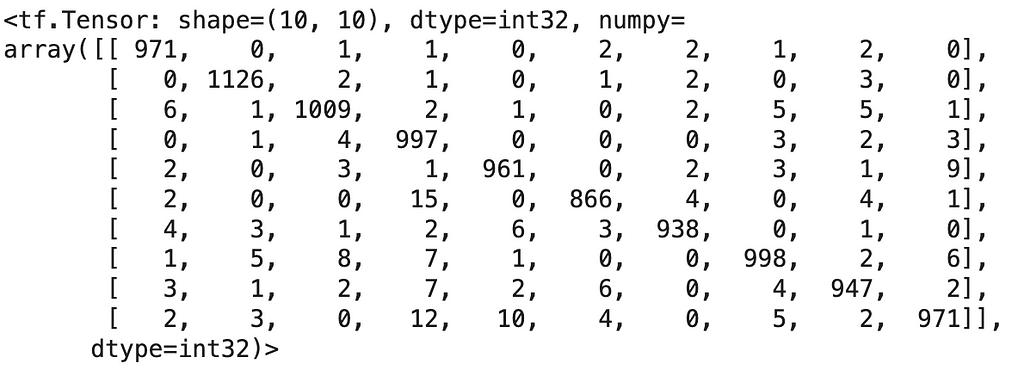

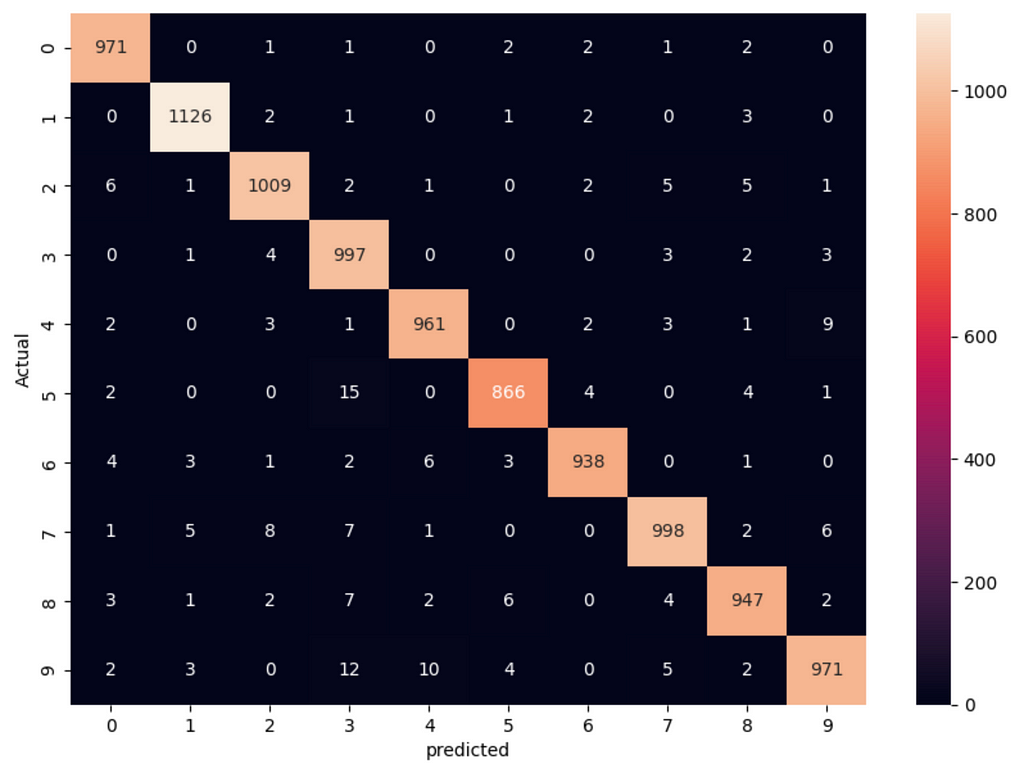

Step 11: Confusion Matrix

To get a better understanding of how our model performs, we can create a confusion matrix.

y_predicted2 = ANN2.predict(X_test)

y_predicted_labels2 = [np.argmax(i) for i in y_predicted2]

- y_predicted2 = ANN2.predict(X_test) generates predictions for the test images.

- y_predicted_labels2 = [np.argmax(i) for i in y_predicted2] converts the prediction vectors to label indices.
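The list comprehension works, but NumPy can also take the argmax of every row in one call by passing axis=1. A small sketch comparing the two on a made-up prediction matrix (`y_pred` is illustrative, not real model output):

```python
import numpy as np

# Hypothetical prediction matrix: 3 images x 10 class scores.
y_pred = np.array([
    [0.1, 0.8, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.9, 0.0, 0.0, 0.1, 0.0, 0.0],
    [0.7, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2, 0.0, 0.0, 0.1],
])

labels_loop = [np.argmax(row) for row in y_pred]   # approach used in this post
labels_vectorised = np.argmax(y_pred, axis=1)      # one call over the whole batch

print(labels_vectorised.tolist())  # [1, 4, 0]
```

Both approaches give the same labels; the vectorised form is usually faster on large arrays.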

We then create the confusion matrix and visualize it.

cm = tf.math.confusion_matrix(labels=y_test, predictions=y_predicted_labels2)

plt.figure(figsize=(10, 7))

sns.heatmap(cm, annot=True, fmt='d')

plt.xlabel("Predicted")

plt.ylabel("Actual")

plt.show()

- tf.math.confusion_matrix(labels=y_test, predictions=y_predicted_labels2) generates the confusion matrix.

- sns.heatmap(cm, annot=True, fmt='d') visualizes the confusion matrix with annotations.
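To see how the matrix is filled in, here is a minimal pure-Python sketch on a made-up three-class problem. It follows the same layout as the heatmap: rows are actual classes and columns are predicted classes. The function is a simplified stand-in, not the TensorFlow implementation.

```python
def confusion_matrix(actual, predicted, num_classes):
    """Rows are actual classes, columns are predicted classes."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for a, p in zip(actual, predicted):
        matrix[a][p] += 1
    return matrix

# Hypothetical 3-class example (MNIST would use num_classes=10).
actual    = [0, 0, 1, 1, 2, 2, 2]
predicted = [0, 1, 1, 1, 2, 0, 2]

cm = confusion_matrix(actual, predicted, num_classes=3)
for row in cm:
    print(row)
# [1, 1, 0]
# [0, 2, 0]
# [1, 0, 2]
```

Values on the diagonal are correct predictions; every off-diagonal cell counts one specific kind of mistake, such as a 2 being classified as a 0.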

Conclusion

In this blog post, we covered the basics of deep learning and walked through the steps of building, training, and evaluating a simple ANN model using the MNIST dataset. We also improved the model by adding a hidden layer and using a different activation function. Deep learning models, though seemingly complex, can be built and understood step-by-step, enabling us to tackle various machine learning problems.

This brings us to the end of this article. I hope you have understood everything clearly. Make sure you practice as much as possible.

If you wish to check out more resources related to Data Science, Machine Learning, and Deep Learning, you can refer to my GitHub account.

You can connect with me on LinkedIn — RAVJOT SINGH.

I hope you liked my article. As a next step, you can try other algorithms or different parameter values to improve the accuracy even further. Please feel free to share your thoughts and ideas.

Building Your First Deep Learning Model: A Step-by-Step Guide was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.

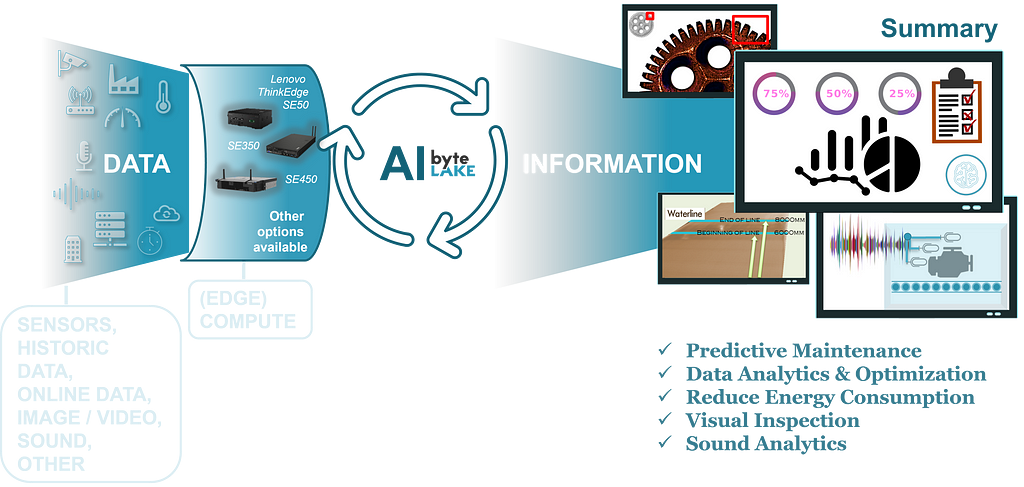

Smart Factories: Concepts and Features

Exploring how new technologies, including artificial intelligence (AI), revolutionize manufacturing processes.

A smart factory is a cyber-physical system that leverages advanced technologies to analyze data, automate processes, and learn continuously. It’s part of the Industry 4.0 transformation, which combines digitalization and intelligent automation. Here are some key features:

- Interconnected Network: Smart factories integrate machines, communication mechanisms, and computing power. They form an interconnected ecosystem where data flows seamlessly.

- Advanced Technologies: Smart factories use AI, machine learning, and robotics to optimize operations. These technologies enable real-time decision-making and adaptability.

- Data-Driven Insights: Sensors collect data from equipment, production lines, and supply chains. AI processes this data to improve efficiency, quality, and predictive maintenance.

Automation, Robots, and AI on the Factory Floor

1. Production Automation

- Robotic Arms: Robots handle repetitive tasks like assembly, welding, and material handling. They enhance precision and speed.

- Collaborative Robots: These work alongside humans, assisting with tasks like packaging, quality control, and logistics.

2. Quality Inspection

- Visual Inspection: AI-powered computer vision systems analyze images or videos to detect defects, ensuring product quality. For instance, a custom Convolutional Neural Network (CNN) can achieve 99.86% accuracy in inspecting casting products.

- Sound Analytics: AI algorithms process audio data to identify anomalies (e.g., machinery malfunctions) based on sound patterns.

3. IoT + AI: Predictive Maintenance and Energy Efficiency

- Predictive Maintenance (IoT Sensors): Connected sensors monitor equipment health. AI algorithms predict failures, allowing timely maintenance. This minimizes unplanned downtime and reduces costs.

- Energy Management and Energy Consumption Analysis: AI analyzes vast data sets to optimize energy usage. It helps reduce waste, manage various energy sources, and enhance sustainability.

- Predictive Energy Demand: AI predicts energy demand patterns, aiding efficient resource allocation.
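As a very rough sketch of the idea behind sensor-based failure prediction, the snippet below flags readings that drift far from a baseline. Production systems typically use trained models over many signals; the threshold rule, the function name `find_anomalies`, and the vibration values here are all assumptions made for illustration.

```python
from statistics import mean, stdev

def find_anomalies(readings, baseline_size=5, threshold=3.0):
    """Flag readings more than `threshold` standard deviations from the baseline mean."""
    baseline = readings[:baseline_size]
    mu, sigma = mean(baseline), stdev(baseline)
    return [i for i, r in enumerate(readings) if abs(r - mu) > threshold * sigma]

# Hypothetical vibration readings (mm/s); the last two drift upward.
vibration = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 2.2, 3.5, 4.1]

print(find_anomalies(vibration))  # [7, 8]
```

Flagged indices could then trigger an inspection before the machine actually fails.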

AI-Driven Energy Management in Smart Factories

1. Real-Time Energy Optimization

- IoT Data Integration: Smart factories deploy IoT sensors across their infrastructure to collect real-time data on energy consumption. These sensors monitor machinery, lighting, HVAC systems, and other energy-intensive components.

- Weather Forecast Integration: By combining IoT data with weather forecasts, AI algorithms predict energy demand variations. For example: when a heatwave is predicted, the factory can pre-cool the facility during off-peak hours to reduce energy costs during peak demand.

2. Dynamic Energy Source Selection

- Production Schedules and Energy Sources: AI analyzes production schedules, demand patterns, and energy prices. It optimally selects energy sources (e.g., solar, grid, and battery storage) based on cost and availability. For example: during high-demand production hours, the factory might rely on grid power. At night or during low-demand periods, it switches to stored energy from batteries or renewable sources.

3. Predictive Maintenance and Energy Efficiency

- Predictive Maintenance: AI predicts equipment failures, preventing unplanned downtime. Well-maintained machinery operates more efficiently, reducing energy waste.

- Energy-Efficient Equipment: AI identifies energy-hungry equipment and suggests upgrades or replacements, such as replacing old motors with energy-efficient ones or installing variable frequency drives (VFDs) to optimize motor speed.

4. Demand Response and Load Shifting

- Demand Response Programs: AI participates in utility demand response programs. When the grid is stressed, the factory reduces non-essential loads or switches to backup power.

- Load Shifting: AI shifts energy-intensive processes to off-peak hours. For example: running heavy machinery during nighttime when electricity rates are lower, charging electric forklifts during off-peak hours, etc.
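Load shifting can be sketched as a simple scheduling problem: given a price for each hour of the day, pick the cheapest hours for a flexible job. The tariff and the helper `cheapest_hours` below are hypothetical, and the sketch ignores real-world constraints such as needing consecutive hours or production deadlines.

```python
def cheapest_hours(hourly_prices, hours_needed):
    """Return the hours of the day (0-23) with the lowest prices, in chronological order."""
    ranked = sorted(range(len(hourly_prices)), key=lambda h: hourly_prices[h])
    return sorted(ranked[:hours_needed])

# Hypothetical tariff in $/kWh: cheap overnight, expensive in the afternoon.
prices = [0.08] * 6 + [0.12] * 6 + [0.20] * 6 + [0.14] * 6

schedule = cheapest_hours(prices, hours_needed=4)
print(schedule)  # [0, 1, 2, 3]
```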

Benefits and Dollar Savings

- Reduced Energy Bills: By optimizing energy usage, factories save on electricity costs.

- Carbon Footprint Reduction: Efficient energy management leads to lower greenhouse gas emissions.

- Operational Efficiency: Fewer breakdowns and smoother operations improve overall productivity.

- Example: A smart factory in Ohio reduced its energy costs by 15% through AI-driven energy management, resulting in annual savings of $500,000.
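Taking the figures in the example at face value, a short calculation shows the energy bill they imply. Only the 15% and $500,000 values come from the example; the rest is derived arithmetic.

```python
annual_savings = 500_000   # dollars, from the example above
savings_rate = 0.15        # 15% reduction, from the example above

# If $500,000 is 15% of the original bill, the original bill is savings / rate.
baseline_cost = annual_savings / savings_rate
cost_after = baseline_cost - annual_savings

print(round(baseline_cost))  # 3333333
print(round(cost_after))     # 2833333
```

In other words, the example describes a factory that was spending roughly $3.3 million a year on energy before the change.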

AI and IoT empower smart factories to make data-driven decisions, minimize waste, and contribute to a more sustainable future. Dollar savings, environmental benefits, and operational efficiency go hand in hand.

Moreover, implementing automated visual inspection and AI-driven predictive maintenance in factories brings further benefits, such as:

Reduced Downtime:

- Predictive Maintenance: By identifying potential equipment failures before they occur, factories can schedule maintenance during planned downtime. This minimizes unplanned interruptions and keeps production lines running smoothly.

Enhanced Quality Control:

- Automated Visual Inspection: AI-powered systems detect defects, inconsistencies, or deviations in real-time. This ensures that only high-quality products reach the market.

- Cost Savings: Fewer defective products mean less waste and rework, leading to cost savings.

Optimized Resource Allocation:

- Energy Efficiency: AI analyzes energy consumption patterns and suggests adjustments. Factories can allocate resources (such as electricity, water, and raw materials) more efficiently.

- Resource Cost Reduction: By using resources judiciously, factories reduce expenses.

Improved Safety:

- Predictive Maintenance: Well-maintained machinery is less likely to malfunction, reducing safety risks for workers.

- Visual Inspection: Detecting safety hazards (e.g., loose bolts and faulty wiring) prevents accidents.

Streamlined Inventory Management:

- Predictive Maintenance: AI predicts spare part requirements. Factories maintain optimal inventory levels, avoiding overstocking or stockouts.

- Cost Savings: Efficient inventory management reduces storage costs and ensures timely replacements.

Better Workforce Utilization:

- Predictive Maintenance: Workers focus on value-added tasks instead of emergency repairs.

- Visual Inspection: Skilled workers can focus on complex inspections, while AI handles routine checks.

Reduced Environmental Impact:

- Energy Efficiency: By optimizing energy usage, factories contribute to sustainability goals and reduce their carbon footprint.

- Waste Reduction: Fewer defects mean less waste, benefiting the environment.

Smart factories leverage technologies like AI, IoT, and advanced robotics to optimize efficiency, quality, and competitiveness. Benefits include reduced downtime, increased operational efficiency, improved product quality, enhanced worker safety, and greater flexibility in responding to market demands.

These technologies not only enhance efficiency but also lead to tangible cost savings, improved safety, and a more sustainable manufacturing ecosystem.

Beyond the Factory Floor: AI in Back Office Automation

While AI plays a crucial role on the factory floor, its impact extends beyond production lines. Back-office automation is equally vital. Here are a few examples:

- Financial Analysis: AI analyzes financial data, detects anomalies, and predicts market trends. CFOs and financial analysts benefit from faster insights and better decision-making.

- Contract Review: Legal teams use generative AI to review contracts, identifying potential issues more accurately.

- HR Automation: Generative AI streamlines employee onboarding and many other tasks once performed by HR teams. These include benefits administration, payroll processing, and chatbots that handle routine HR inquiries.

- Document Processing: AI automates data entry, invoice processing, and e-mail management, so administrative teams can focus on higher-value work.

In summary, AI transforms not only the factory floor but also the entire organizational ecosystem.

Smart Factories: Concepts and Features was originally published in Becoming Human: Artificial Intelligence Magazine on Medium, where people are continuing the conversation by highlighting and responding to this story.